(2023 / Head of Audio / Frontier Developments)

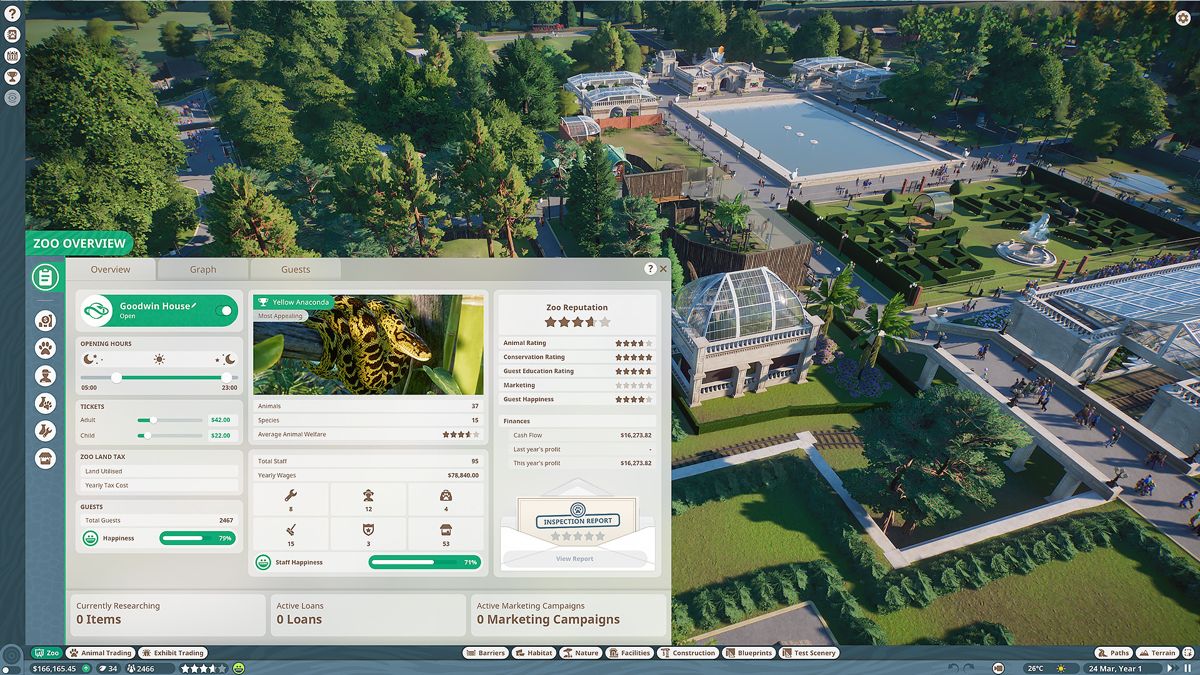

Official Website: Planet Zoo: Console Edition

| [GENRE] | CMS (Construction and Management Simulation) |
|---|---|
| [DURATION] | 2017-2024 |
| [ROLE] | Audio Lead |
| [DEVELOPER] | Frontier Developments |
| [PLATFORM] | PC, PS5, Xbox Series S, Xbox Series X |

Audio Team

Head of Audio: Jim Croft

Principal Audio Designer (Lead): Matthew Florianz

Senior Audio Designers: Aaron Holland, James Stant, Pablo Canas Llorente

Senior Technical Sound Designer: Stephen Hollis

Audio Designer: Benjamin Scholey

Additional Sound Design: Dylan Vadamootoo, Michael Maidment

Principal Audio Programmer (Lead): Will Augar

Senior Audio Programmers: Ian Hawkins, Jamie Stuart

Audio Programmer: Jon Ashby

Graduate Audio Programmer: Hakan Yurdakul

Senior Audio Test Engineer: Robbie Chesters

Audio Test Engineer: Sam Doyle

Music: J.J. Ipsen and Jim Guthrie with Young Mbazo

African Choir Director: Toya Delazy

African Choir Recording Studio: Sunset Recording Studios

African Choir Engineer: Jurgen Van Wechmar

Music Supervisor: Janesta Boudreau

The challenge of creating a soundscape for Planet Zoo is that animals are usually quiet. While researching and visiting zoos, our recordings mostly captured the sound of humans, water features and birds. One particularly revealing recording showed a rhino chasing giraffes: the animals passing close to the camera were kicking up dust and everything looked chaotic, yet it was still mostly quiet! The footfalls, grunts and vocalisations were barely noticeable over the wind and the people reacting. Animals tend to be very quiet because they don't want to attract attention.

Using data provided by the audio tech team, I implemented a Wwise game mix that thins out human presence layers, so that crowds, transport rides and ambiences sit below the mix level of our focused and subtle animal sounds. Player-relevant feedback, such as direct crowd reactions to animal behaviours, is of course retained in the mix.
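
One way to picture this mix change is as a conditional duck: while animals are in focus, human-presence layers are pulled down, and layers flagged as player-relevant are exempt. The sketch below is a minimal Python illustration under stated assumptions, not the shipped Wwise setup; the layer names, the `player_relevant` flag and the dB values are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical extra attenuation (dB) for human-presence layers while animals
# are in focus; chosen so crowds end up below quiet animal layers.
HUMAN_PRESENCE_DUCK_DB = -15.0


@dataclass
class Layer:
    name: str
    base_db: float                 # authored level of the layer
    human_presence: bool           # crowds, transport rides, ambiences
    player_relevant: bool = False  # e.g. direct crowd reactions to animals


def mixed_level_db(layer: Layer, animal_in_focus: bool) -> float:
    """Level of a layer after the (hypothetical) game mix is applied."""
    if animal_in_focus and layer.human_presence and not layer.player_relevant:
        return layer.base_db + HUMAN_PRESENCE_DUCK_DB
    return layer.base_db


def db_to_gain(db: float) -> float:
    """Convert decibels to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)


if __name__ == "__main__":
    layers = [
        Layer("crowd_walla", -6.0, human_presence=True),
        Layer("crowd_reaction_to_animal", -6.0, human_presence=True, player_relevant=True),
        Layer("animal_footsteps", -18.0, human_presence=False),
    ]
    for layer in layers:
        level = mixed_level_db(layer, animal_in_focus=True)
        print(f"{layer.name}: {level:.1f} dB (gain {db_to_gain(level):.3f})")
```

In a real project this logic would more likely live in the middleware's bus and state setup than in game code; the sketch only shows the decision being made.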

Further to the mix change, which benefits delicate sound recordings, it was also important to inform players about how human noise affects animal stress levels. Enclosures need to provide animals with quiet areas they can retreat to. Deferred ray-casts originating from the camera identify these quiet areas and thin out crowds and other disturbances even further.
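
A minimal sketch of how deferred camera ray-casts could feed the mix, assuming a fan of rays around the camera that is cast a few rays per frame rather than all at once. The `ray_cast` callback, the ray counts and the dB mapping are hypothetical stand-ins; the engine's real query and weighting are not described here.

```python
import math
from typing import Callable, List

# Hypothetical engine callback: given a direction angle (radians) around the
# camera, return the loudness (0..1) of the nearest disturbance the ray hits,
# or 0.0 if it hits nothing noisy.
RayCast = Callable[[float], float]


class QuietAreaProbe:
    """Spread a fan of camera rays over several frames ("deferred") and keep
    a per-direction disturbance estimate."""

    def __init__(self, num_rays: int = 16, rays_per_frame: int = 4):
        self.num_rays = num_rays
        self.rays_per_frame = rays_per_frame
        # Directions not yet sampled read as quiet until their ray is cast.
        self.samples: List[float] = [0.0] * num_rays
        self.next_ray = 0

    def update(self, ray_cast: RayCast) -> None:
        """Cast a small batch of rays this frame, round-robin around the camera."""
        for _ in range(self.rays_per_frame):
            angle = 2.0 * math.pi * self.next_ray / self.num_rays
            hit = ray_cast(angle)
            self.samples[self.next_ray] = max(0.0, min(1.0, hit))
            self.next_ray = (self.next_ray + 1) % self.num_rays

    def quietness(self) -> float:
        """1.0 means no disturbances around the camera, 0.0 means loud everywhere."""
        return 1.0 - sum(self.samples) / self.num_rays

    def extra_duck_db(self, max_duck_db: float = -9.0) -> float:
        """Thin out crowds further the quieter the area is (hypothetical mapping)."""
        return max_duck_db * self.quietness()
```

Spreading the rays over frames keeps the per-frame cost bounded at the price of a slightly stale estimate, which suits slow-changing park ambience.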

Making quiet sounds more audible in the mix is Planet Zoo's audio solution for staying close to the experience of a real zoo, while keeping player-relevant information front and centre in a mix full of interesting sonic detail.

The audio team ranked vocalisations by importance; combined with a code-side voice management system, this ranking lets calls be prioritised so that player-relevant information is never lost. Specific, important animal calls on park-wide emitters are played through a hero emitter. This produces a background animal soundscape that reflects real-world animal behaviour and locations. Players can then react to what they hear: even if they can't see an animal directly, they know which direction to look in.
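
The rank-based prioritisation can be sketched as a voice limiter that sorts call requests by their authored rank, breaking ties by distance, plus a picker that promotes the single most important call to a positional "hero" voice. This is an illustrative Python sketch under assumptions: the `CallRequest` fields, the "lower rank is more important" convention and the tie-break rule are hypothetical, not the game's actual voice manager.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CallRequest:
    animal_id: int
    rank: int        # hypothetical importance rank: lower = more important
    distance: float  # metres from the listener


def _priority(request: CallRequest) -> tuple:
    # Most important rank first; nearer animals win ties.
    return (request.rank, request.distance)


def select_voices(requests: List[CallRequest], max_voices: int) -> List[CallRequest]:
    """Keep only the highest-priority calls when more are requested than voices allow."""
    return sorted(requests, key=_priority)[:max_voices]


def pick_hero(requests: List[CallRequest]) -> Optional[CallRequest]:
    """Choose one call from a park-wide emitter to play as a positional hero
    voice, so the player can tell which direction it came from."""
    if not requests:
        return None
    return min(requests, key=_priority)
```

For example, with requests of rank 2 at 10 m, rank 0 at 300 m, rank 2 at 5 m and rank 1 at 50 m, a two-voice limit keeps the rank 0 and rank 1 calls even though they are the farthest away, which is the point of ranking by relevance rather than loudness.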

Animals are generally quiet, not wanting to attract attention, but not always. An ambling animal is quiet, but when it senses food it gets excited. In both situations the game may be moving the animal with the same base animation, yet the context for audio behaviour is very different.

Audio, AI and animation worked closely together to present the correct type of vocalisation for each behaviour. When an animal spots food and the audio code has identified this as a behaviour, additional layers of "excited" sounds are added. Using Wwise callbacks, the animation system triggers partial animations to visualise those vocalisations. As a result, animals sound more excited as they walk towards food without animation having to create additional (costly) variations for every animal.
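
The flow from detected behaviour to extra sound layers to animation can be sketched as follows. This is a hypothetical Python outline, not Frontier's code: the `Behaviour` values, layer names and `on_vocalisation` hook are assumptions, and the hook merely stands in for a Wwise event callback that would fire when a vocalisation plays.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Callable, List


class Behaviour(Enum):
    AMBLING = auto()
    SPOTTED_FOOD = auto()


# Hypothetical mapping from an audio-identified behaviour to extra vocal layers.
EXCITEMENT_LAYERS = {
    Behaviour.AMBLING: [],
    Behaviour.SPOTTED_FOOD: ["vocal_excited_short", "vocal_excited_breath"],
}


@dataclass
class AnimalAudio:
    animal_id: int
    # Stand-in for an audio-engine callback: invoked once per vocalisation so
    # the animation system can play a matching partial animation.
    on_vocalisation: Callable[[int, str], None]
    active_layers: List[str] = field(default_factory=list)

    def set_behaviour(self, behaviour: Behaviour) -> None:
        """Swap in the layers for the new behaviour. The base locomotion
        animation is untouched; only the audio context changes."""
        self.active_layers = list(EXCITEMENT_LAYERS[behaviour])

    def play_vocalisations(self) -> None:
        """Trigger each active layer and notify animation for each one."""
        for layer in self.active_layers:
            self.on_vocalisation(self.animal_id, layer)
```

The design choice this illustrates is that the same walk cycle serves both cases: excitement comes from audio layers, and animation only adds small partials in response, instead of authoring a separate "excited walk" per species.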

A similar system is used for chatter between primates and for howling wolves. Audio keeps track of multiple animals, compares their behaviours (and motivations), and adds layers of excitement to trigger the right sounds, crucially at a believable interval.
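
A "believable interval" between group calls could be enforced with a small scheduler: a minimum gap between calls, random jitter that shrinks as the group gets more excited, and a preference for a different caller each time so calls alternate like a conversation. The sketch below is purely illustrative; `GroupCallScheduler`, the 0..1 excitement scores and the timing constants are hypothetical.

```python
import random
from typing import Dict, List, Optional


class GroupCallScheduler:
    """Decide which animal in a group (e.g. a wolf pack or primate troop)
    calls next, enforcing a minimum gap plus random jitter between calls."""

    def __init__(self, min_interval: float, jitter: float, seed: int = 0):
        self.min_interval = min_interval
        self.jitter = jitter
        self.rng = random.Random(seed)
        self.next_allowed_time = 0.0
        self.last_caller: Optional[int] = None

    def update(self, now: float, excitement: Dict[int, float]) -> Optional[int]:
        """Return the id of the animal that should call at time `now`, or None.
        `excitement` maps animal id to a 0..1 score derived from behaviour."""
        if now < self.next_allowed_time:
            return None
        # Prefer someone other than the last caller so calls alternate;
        # fall back to the same animal if nobody else is excited.
        candidates: List[int] = [a for a, e in excitement.items()
                                 if e > 0.0 and a != self.last_caller]
        if not candidates:
            candidates = [a for a, e in excitement.items() if e > 0.0]
        if not candidates:
            return None
        caller = max(candidates, key=lambda a: excitement[a])
        self.last_caller = caller
        # More group excitement shortens the gap, never below min_interval.
        group = max(excitement.values())
        gap = self.min_interval + self.rng.uniform(0.0, self.jitter) * (1.0 - group)
        self.next_allowed_time = now + gap
        return caller
```

A fixed seed keeps the sketch deterministic for testing; in a game the random source would normally be shared with the rest of the simulation.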

Audio-triggered animations are the result of a close and successful cross-discipline collaboration between the audio, animation, game and AI teams.

© 2023 Frontier Developments, plc. All rights reserved. Frontier and the Frontier Developments logo are trademarks or registered trademarks of Frontier Developments, plc

Elite © 1984 David Braben & Ian Bell. Frontier © 1993 David Braben, Frontier: First Encounters © 1995 David Braben and Elite Dangerous © 1984 - 2023 Frontier Developments Plc. All rights reserved. 'Elite', the Elite logo, the Elite Dangerous logo, 'Frontier' and the Frontier logo are registered trademarks of Frontier Developments plc. Elite Dangerous: Odyssey and Elite Dangerous: Horizons are trademarks of Frontier Developments plc. All rights reserved.

Planet Zoo © 2023 Frontier Developments plc. All rights reserved. Planet Coaster © 2023 Frontier Developments plc. All rights reserved.

Jurassic World Evolution 2 © 2022 Universal City Studios LLC and Amblin Entertainment, Inc. All Rights Reserved. © 2022 Frontier Developments, plc. All rights reserved.


© 2018 Universal Studios and Amblin Entertainment, Inc. Jurassic World, Jurassic World Fallen Kingdom, Jurassic World Evolution and their respective logos are trademarks of Universal Studios and Amblin Entertainment, Inc. Jurassic World and Jurassic World Fallen Kingdom motion pictures ©2015-2018 Universal Studios, Amblin Entertainment, Inc. and Legendary Pictures. Licensed by Universal Studios. All Rights Reserved. 'PS', 'PlayStation', and 'PS4' are registered trademarks or trademarks of Sony Interactive Entertainment Inc. All other trademarks and copyright are acknowledged as the property of their respective owners. This audio show-reel highlights the work of the audio team. It has not been endorsed by Frontier Developments or Universal Pictures.

©2014 Sony Computer Entertainment Europe. LittleBigPlanet is a trademark of Sony Computer Entertainment Europe. Developed by Sumo Digital Ltd.

Tearaway™ Unfolded © 2018 Sony Interactive Entertainment Europe. Published by Sony Interactive Entertainment Europe. Developed by Media Molecule. 'Tearaway' is a trademark of Sony Interactive Entertainment Europe. All rights reserved.

F1® Manager 2022 Game - an official product of the FIA FORMULA ONE WORLD CHAMPIONSHIP. © Frontier Developments, PLC. All rights reserved. THE F1 FORMULA 1 LOGO, F1 LOGO, FORMULA 1, F1, FIA FORMULA ONE WORLD CHAMPIONSHIP, GRAND PRIX AND RELATED MARKS ARE TRADE MARKS OF FORMULA ONE LICENSING BV, A FORMULA 1 COMPANY. THE F2 FIA FORMULA 2 CHAMPIONSHIP LOGO, FIA FORMULA 2 CHAMPIONSHIP, FIA FORMULA 2, FORMULA 2, F2 AND RELATED MARKS ARE TRADE MARKS OF THE FEDERATION INTERNATIONALE DE L'AUTOMOBILE AND USED EXCLUSIVELY UNDER LICENCE. ALL RIGHTS RESERVED. THE F3 FIA FORMULA 3 CHAMPIONSHIP LOGO, FIA FORMULA 3 CHAMPIONSHIP, FIA FORMULA 3, FORMULA 3, F3 AND RELATED MARKS ARE TRADE MARKS OF THE FEDERATION INTERNATIONALE DE L'AUTOMOBILE AND USED EXCLUSIVELY UNDER LICENCE. ALL RIGHTS RESERVED.

© Sony Interactive Entertainment LLC. "PlayStation Family Mark", "PlayStation", "PS5 Logo", "PS5", "PS4 Logo", "PS4", "PlayStation Shapes Logo" and "Play Has No Limits" are registered trademarks of Sony Interactive Entertainment Inc.
